Data center infrastructure is undergoing a massive shift. Virtualization has had a profound impact on customer expectations of flexibility and agility. Especially once customers are more than 70% virtualized, they can realize tremendous operational savings by consolidating management in their virtualization framework.

At that point, customers typically do not want to deploy physical appliances; they want everything managed from within their virtualization framework. Similar shifts in networking and storage mean that the basic infrastructure now runs entirely in software on generic hardware. This is the software-defined data center.

***

VPLEX has been no stranger to this conversation. Given VPLEX's very strong affinity with VMware use cases, customers have been asking us for a software-only version of VPLEX, and that is precisely what we have built. This past week we launched VPLEX Virtual Edition, which will GA toward the end of Q2.

What is the VPLEX Virtual Edition and what does it do?

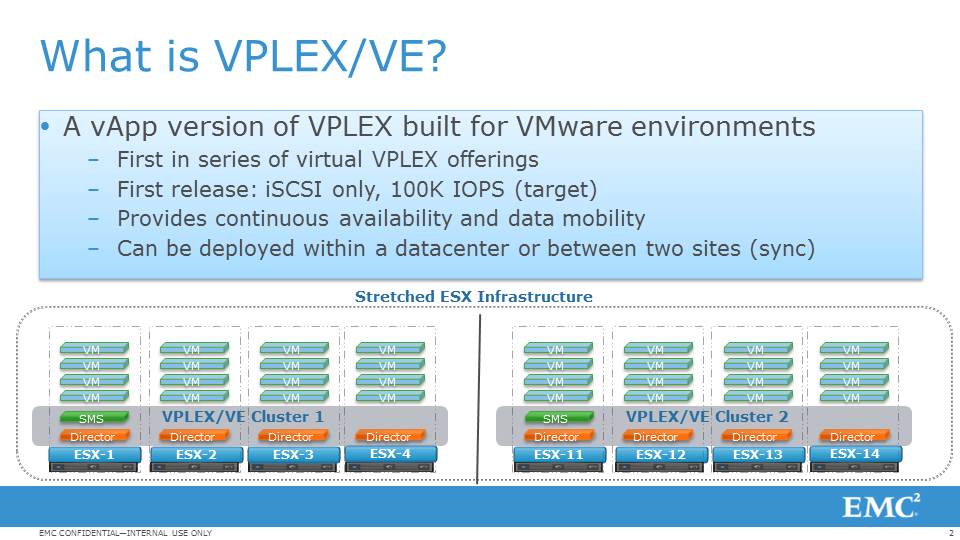

The VPLEX Virtual Edition (VPLEX/VE) is a vApp version of VPLEX designed to run in an ESX server environment and provide continuous availability and mobility within and across data centers. We expect it to be the first in a series of virtual offerings. Compared with the physical appliance, every VPLEX director becomes a vDirector (a virtual machine). For the first release, the only supported configuration is the ‘4×4’: four vDirectors on each side of a VPLEX Metro. From a configuration standpoint, that is equivalent to two VPLEX engines on each side of a VPLEX Metro cluster. The two sides of VPLEX/VE can be deployed within a single data center or across data centers up to 5 msec apart.
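To make the sizing rules above concrete, here is a minimal planning sketch that checks a proposed deployment against them: four vDirectors per side, two directors per physical VPLEX engine (hence "two engines" per side), and sides no more than 5 msec apart. Everything here is hypothetical: the `Side` model, the function names, and the constants are illustrative only and are not an EMC tool or API, and treating "5 msec apart" as a simple latency ceiling is an assumption about how that figure is measured.

```python
from dataclasses import dataclass

# Hypothetical planning constants taken from the text; none of these names
# correspond to an EMC API or configuration file.
VDIRECTORS_PER_SIDE = 4           # the '4x4' configuration: four vDirectors per side
DIRECTORS_PER_ENGINE = 2          # a physical VPLEX engine contains two directors
MAX_INTER_SITE_LATENCY_MS = 5.0   # "up to 5 msec apart" (assumed to be a latency ceiling)


@dataclass
class Side:
    """One side of a VPLEX/VE Metro deployment (hypothetical model)."""
    name: str
    vdirectors: int


def engine_equivalent(side: Side) -> int:
    """How many physical VPLEX engines this side's vDirectors correspond to."""
    return side.vdirectors // DIRECTORS_PER_ENGINE


def validate_topology(a: Side, b: Side, latency_ms: float) -> list[str]:
    """Return the problems found; an empty list means the plan fits the 4x4 limits."""
    problems = []
    for side in (a, b):
        if side.vdirectors != VDIRECTORS_PER_SIDE:
            problems.append(
                f"{side.name}: {side.vdirectors} vDirectors, expected {VDIRECTORS_PER_SIDE}"
            )
    if latency_ms > MAX_INTER_SITE_LATENCY_MS:
        problems.append(
            f"sites are {latency_ms} ms apart, limit is {MAX_INTER_SITE_LATENCY_MS} ms"
        )
    return problems


site_a = Side("site-a", vdirectors=4)
site_b = Side("site-b", vdirectors=4)
print(engine_equivalent(site_a))               # 2
print(validate_topology(site_a, site_b, 3.0))  # []
```

A plan with only two vDirectors on one side and 7.5 ms between sites would come back with two problems, one per violated rule.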

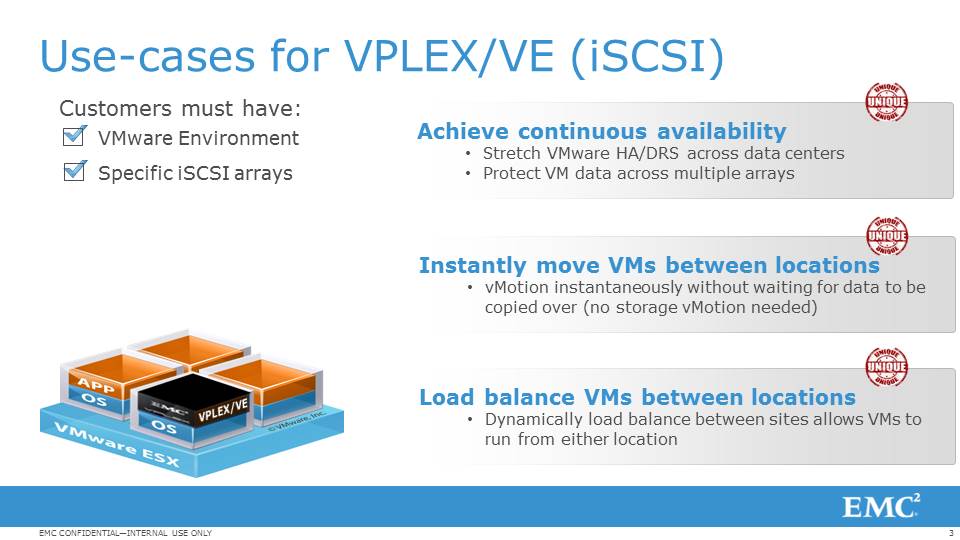

VPLEX/VE supports iSCSI for both front-end and back-end connectivity. For the initial release, we have decided to support only VPLEX Metro-equivalent use cases; most VPLEX Local use cases can be addressed with a combination of vMotion and Storage vMotion. The supported use cases are:

- The ability to stretch VMware HA / DRS clusters across data centers for automatic restart and protection of VMs across multiple arrays

- Load balancing of virtual machines across data centers

- Instant movement of VMs across distance

From a performance perspective, VPLEX/VE targets workloads of up to 100K IOPS; as always, actual performance will depend on your workload. The deployment is designed to be customer-installable from the get-go, with an installation wizard that guides you through the entire process. Once VPLEX/VE is GA, refer to the release notes for the list of supported ESX servers.

The vDirectors must be placed on separate ESX servers so that no two vDirectors share a host. This maximizes availability, since the failure of any single ESX server takes down at most one vDirector. Running application VMs on an ESX server that also hosts a vDirector is supported, so you should be able to use your existing ESX servers (subject to the minimum requirements that will be established for the vDirectors).
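The placement rule is an anti-affinity constraint, and it is easy to audit before deployment. The sketch below is a hypothetical pre-flight check, not part of the VPLEX/VE installer: given a mapping from vDirector name to ESX host, it reports any host carrying more than one vDirector and computes how many vDirectors a single host failure could take out. The vDirector and host names are made up for illustration. Application VMs are intentionally left out of the mapping because sharing a host with them is allowed.

```python
from collections import Counter, defaultdict


def colocated_vdirectors(placement: dict[str, str]) -> dict[str, list[str]]:
    """Return ESX hosts that carry more than one vDirector, with the offenders.

    `placement` maps a vDirector name to the ESX host it runs on. Application
    VMs are deliberately absent: sharing a host with them is allowed.
    """
    by_host = defaultdict(list)
    for vdirector, host in placement.items():
        by_host[host].append(vdirector)
    return {host: sorted(vds) for host, vds in by_host.items() if len(vds) > 1}


def worst_case_single_host_loss(placement: dict[str, str]) -> int:
    """vDirectors lost if the most heavily loaded ESX host fails (1 when the rule holds)."""
    return max(Counter(placement.values()).values(), default=0)


# Hypothetical vDirector and host names for one side of a 4x4 deployment.
good = {"vd-1": "esx-01", "vd-2": "esx-02", "vd-3": "esx-03", "vd-4": "esx-04"}
bad = {"vd-1": "esx-01", "vd-2": "esx-02", "vd-3": "esx-03", "vd-4": "esx-01"}

print(colocated_vdirectors(good))          # {}
print(colocated_vdirectors(bad))           # {'esx-01': ['vd-1', 'vd-4']}
print(worst_case_single_host_loss(good))   # 1
print(worst_case_single_host_loss(bad))    # 2
```

Checking the worst-case loss rather than just the violation list makes the availability argument explicit: with a valid placement, losing any one ESX server costs exactly one vDirector.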

An I/O flows from the application VM over iSCSI to a VPLEX/VE vDirector VM, and from there to the iSCSI array connected to VPLEX/VE. Speaking of arrays, right out of the chute we support VNXe arrays, and we will add other iSCSI arrays over time.

With VPLEX/VE, we have had the opportunity to do a lot of things differently. One of our guiding principles was not to think of it as a storage product, but as a product designed for VMware environments and targeted at the ESX administrator. Naturally, I cannot wait to see it in our customers' hands, to learn whether we have hit our marks and what adjustments are needed.

Equally important, this is a strategic imperative within EMC. You can expect to see much more of our product portfolio embark on the software-defined journey. There are many intersections within the portfolio that we have only begun to explore (HINT: composing software is a lot easier than composing hardware!).

Frequently Asked Questions

Since launch, I have seen a ton of questions on Twitter and internal mailing lists, and from people reaching out to me directly or indirectly. Here are the collated answers:

- Is VPLEX/VE available right now?

- A: VPLEX/VE will GA towards the end of Q2.

- Will VPLEX/VE support non-EMC arrays?

- A: As with VPLEX, we expect to qualify additional EMC and non-EMC arrays over time based on customer demand. Expect new additions fairly quickly after GA.

- Will I be able to connect VMs from ESX clusters other than the one hosting VPLEX/VE?

- A: No.

- Will I be able to connect non-VMware (non-ESX) hosts to VPLEX/VE?

- A: At this point, we only support VMware ESX hosts connecting to VPLEX/VE over iSCSI. This is one of the reasons management has been built into the vSphere Web Client.

- Can I connect VPLEX/VE with VPLEX?

- A: VPLEX/VE is deployed as a Metro-equivalent platform (i.e., both sides are VPLEX/VE). Connecting VPLEX/VE to VPLEX is not supported. If you have interesting use cases of this ilk, we would love to hear from you; leave a note in the comments section below and we will get in touch.

- Is RecoverPoint supported with VPLEX/VE?

- A: Not today. To be explicit, the MetroPoint topology, which also launched last week, is not supported with VPLEX/VE either.

- Is VPLEX/VE supported with ViPR?

- A: At GA, ViPR will not support VPLEX/VE. Both the ViPR and VPLEX/VE teams are actively looking at this.

- Does VPLEX/VE support deployment configurations other than a 4×4?

- A: Currently, 4×4 is the only supported deployment configuration. Over time, we expect to support additional configurations, driven primarily by customer demand.

- Will VPLEX/VE be qualified under vMSC (vSphere Metro Storage Cluster)?

- A: Yes.

If you are interested in a CliffsNotes version of this, here is a short video that Paul and I did walking through the Virtual Edition: