As Dell Technologies continues to create technologies that drive human progress, obstacles can slow the adoption of these new solutions. In the data center, nowhere are these hurdles more evident than with AI workloads. AI and other demanding workloads require the latest GPUs and CPUs to deliver the needed application performance, so thermal and power questions often arise during deployment planning. To help, Dell’s server thermal engineering team has been delivering Dell Smart Cooling, a customer-centric collection of innovations, for many years. Triton, for example, was an early liquid-cooled server product from 2016. Fast forward to 2024, and we’re supplying server cooling solutions such as the Dell DLC3000 DLC rack that Verne Global is using and the Dell modular data centers that offer up to 115 kW per rack.

Current Cooling Choices

Previous blogs have covered the cooling requirements of the latest CPUs and GPUs and the different cooling options supported by the PowerEdge portfolio. When these high-powered servers are deployed, the heat generated per rack can exceed what traditional air cooling can handle. In addition, customers want to be more sustainable and more efficient with power usage in the data center. So, let’s look at the data center cooling methods and strategies available to customers today to meet these increasing cooling demands.

Here’s a quick overview of the most common technologies used as building blocks when architecting a data center cooling environment.


- Direct liquid cooling (DLC) uses cold plates in direct contact with internal server elements such as CPUs and GPUs; liquid is then used to cool the cold plate and transport heat away from these processors.

- In-row cooling solutions are designed to be deployed within a data center aisle alongside racks to cool and distribute chilled air to precise locations.

- Rear door heat exchangers (RDHx) work by capturing the heat from servers’ hot exhaust air via a liquid-cooled heat exchanger installed on the rear of the server rack.

- Enclosure refers to containing the heated exhaust air, cooling it and recirculating it, all completely isolated from the rest of the data center’s chilled air.

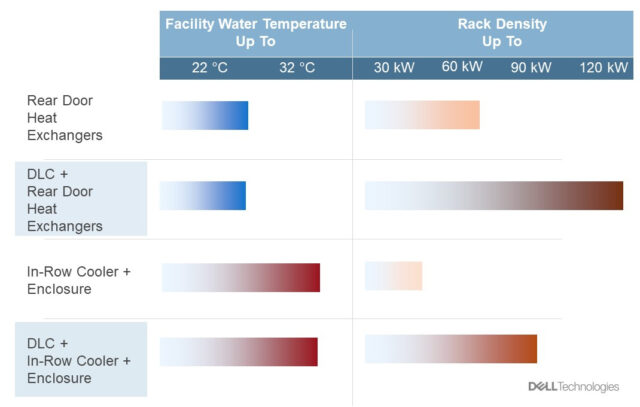

Each cooling technology supports a different rack thermal density and efficiency, letting customers match the cooling solution to their requirements. These solutions can be deployed at any scale, from a single rack to multiple aisles. In-row coolers, combined with row or rack containment, capture 100% of the IT-generated heat at the rack(s), so the only air conditioning required in the data hall is for human comfort. RDHx also captures 100% of the IT-generated heat to facility water at the rack, while conditioning the air in the space at the same time. Because of this air-conditioning function, the facility water supplied to an RDHx must be cooler (up to approximately 20 °C) than the water that can be used with in-row coolers (up to 32 °C). Higher facility water temperatures let the chillers that cool the water use less energy, which is desirable, but that is only part of the overall efficiency story.
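To make the water temperature trade-off concrete, the short Python sketch below checks which of the two 100% heat capture options could work with a given facility supply water temperature. It simply encodes the approximate limits quoted above (about 20 °C for RDHx, 32 °C for in-row coolers); the dictionary and the `compatible_heat_capture` function are hypothetical illustrations, not a Dell tool, and real limits depend on the specific product and site design.

```python
# Approximate upper limits on facility supply water temperature (°C), taken
# from the figures quoted in the text. These are illustrative assumptions;
# actual limits depend on the specific equipment and site design.
MAX_SUPPLY_TEMP_C = {
    "rear door heat exchanger (RDHx)": 20.0,
    "in-row cooler with containment": 32.0,
}


def compatible_heat_capture(supply_temp_c: float) -> list[str]:
    """Hypothetical helper: return the 100% heat capture options whose
    approximate water temperature limit is at or above the supply temperature."""
    return [
        option
        for option, limit in MAX_SUPPLY_TEMP_C.items()
        if supply_temp_c <= limit
    ]


if __name__ == "__main__":
    for temp in (18.0, 25.0, 35.0):
        options = compatible_heat_capture(temp) or ["none (needs colder water)"]
        print(f"{temp:.0f} °C supply water -> {', '.join(options)}")
```

In this sketch, 18 °C water suits both options, 25 °C water suits only the in-row cooler, and 35 °C water exceeds both limits, which mirrors why warmer-water designs favor in-row cooling with containment.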

Combining these 100% heat capture technologies with DLC increases efficiency even more by decreasing the fan power required to cool the IT equipment.

Server Cooling Efficiency

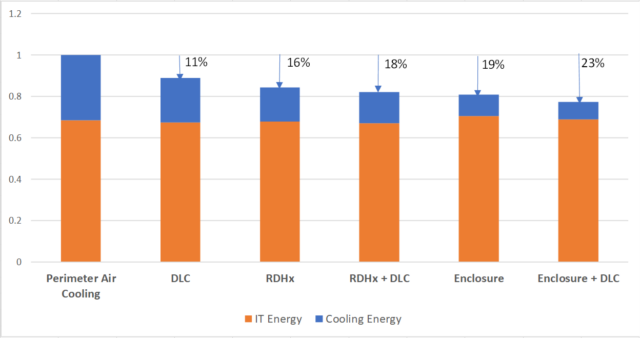

These different solutions and methods consume different amounts of power to deliver cooling. Figure 2 highlights annual energy usage for different cooling methods when used to cool a typical rack of dual-CPU servers. The bars show the IT energy and cooling energy for each cooling approach. IT energy includes everything inside the server, including internal fans. Cooling energy covers the cooling equipment outside the server, starting at the coolant distribution units (CDUs) or computer room air handlers (CRAHs) and including an air-cooled chiller outside the data center. This model is specifically for a data center located in the Southern United States.

The first bar represents a typical data center that uses air handlers stationed around the perimeter of the data hall, blowing air towards the servers. Next, adding DLC to cool the CPUs in each server can save about 11% of the total energy consumed by this perimeter air handler baseline. Replacing the perimeter cooling with rear door heat exchangers (RDHx) on each rack can save 16% annually, and adding DLC saves another 2% beyond that. As noted above, deploying IT in an enclosure with an in-row cooler permits warmer water to be used, which brings a 19% energy savings over perimeter air handlers. Finally, combining this enclosure with DLC saves 23% of the energy consumed by traditionally cooled racks.
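To translate these percentages into energy for a specific rack, the Python sketch below applies each quoted saving to a baseline annual energy figure. Two assumptions are labeled here and in the code: the "another 2%" for RDHx + DLC is read as 2 percentage points (18% total versus the baseline), and the 150,000 kWh baseline is a hypothetical value for illustration, not a figure from Dell’s model.

```python
# Savings relative to the perimeter air handler baseline, as quoted in the text.
# Assumption: "another 2%" for RDHx + DLC is read as 2 percentage points,
# i.e. 18% total versus the baseline.
SAVINGS_VS_BASELINE = {
    "Perimeter air handlers (baseline)": 0.00,
    "Perimeter air handlers + DLC": 0.11,
    "RDHx": 0.16,
    "RDHx + DLC": 0.18,
    "Enclosure with in-row cooler": 0.19,
    "Enclosure with in-row cooler + DLC": 0.23,
}


def annual_energy_kwh(baseline_kwh: float) -> dict[str, float]:
    """Estimate annual IT + cooling energy for each approach from a baseline."""
    return {
        approach: baseline_kwh * (1.0 - saving)
        for approach, saving in SAVINGS_VS_BASELINE.items()
    }


if __name__ == "__main__":
    # Hypothetical baseline energy per rack per year (illustrative only).
    baseline = 150_000.0
    for approach, kwh in annual_energy_kwh(baseline).items():
        print(f"{approach:<36} {kwh:>10,.0f} kWh  (saves {baseline - kwh:>8,.0f} kWh)")
```

Because every saving is expressed against the same baseline, the approaches can be compared directly. For example, moving from RDHx + DLC to an enclosure with an in-row cooler + DLC saves a further 5% of the baseline under the percentage-point reading above.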

The Benefits of Dell Technologies Solutions

There are several alternative cooling methods in the marketplace. For example, some vendors have chosen to apply direct liquid cooling to additional internal server components, including memory, network interfaces and storage, so that the DLC solution is in contact with almost every heat-producing component inside each server. These solutions often require custom copper cold plates and additional piping inside the server to bring all the components into contact with the liquid. At Dell, we don’t believe costly, complex copper cooling is the best approach. We believe organizations can achieve many benefits by combining liquid and air cooling into a hybrid server cooling solution, including:


- Much greater flexibility in server configurations. Customers can decide the server configuration (memory/PCIe cards/storage/etc.) without being bound to one server cold plate design.

- Designs with far fewer hoses and joints where leaking may occur.

- Simple on-site service procedures with easy access to replace server components.

- A broad range of servers to choose from.

Dell’s hybrid approach is less complicated, enabling greater agility in cooling new and different processors and server platforms as they become available.

Analysis using Dell’s in-house models shows that a hybrid air + DLC cooled deployment in a well-designed, well-managed, low water temperature solution can use just 3% to 4% more cooling energy than the “cold plate everything” approach used by some other vendors, while bringing the benefits listed above.¹

Harness the Next Generation of Smart Cooling

Dell continues its cooling strategy of being open and flexible, offering customers choice rather than a one-size-fits-all approach. These advanced data center cooling methods are now moving from high performance computing clusters into mainstream deployments, enabling the next generation of peak-performing servers that support AI and other intense workloads. Dell’s smart cooling is already helping many PowerEdge customers improve their overall server cooling, energy efficiency and sustainability. Come and talk with the cooling experts in the PowerEdge Expo area at Dell Technologies World, or ask your account team for a session with a data center cooling subject matter expert.

¹ Based on in-house modelling using data collected by the Dell thermal team, January 2024.