Back in 2003, Google published its vision for a scale-out, resilient, petabyte-scale filesystem called the Google File System. This inspired the creation of an open-source alternative called the Hadoop Distributed File System (HDFS), which has been a catalyst for Hadoop’s phenomenal growth in the past few years.

With Hadoop’s increased enterprise adoption, there is greater need to protect business critical datasets in Hadoop clusters. This is motivated in large part by compliance, regulation, data protection and business continuity planning.

In this blog, the first in a series about the Hadoop ecosystem and ECS, let us talk about the need for disaster recovery and geo-capabilities and provide a glimpse into EMC Elastic Cloud Storage (ECS)’s unique position to address this.

State of the Union

Traditionally, HDFS provides robust protection against disk, node and rack failures. The mechanisms to protect data against entire datacenter failures and outages, however, leave much to be desired. The distcp tool built into the Hadoop distribution has been the foundation for most Hadoop vendor backup and recovery solutions. Distcp performs inter-cluster data transfer, usually to a passive backup cluster. It runs periodic, recurring MapReduce jobs to copy datasets over, which consumes compute resources on the source cluster and leaves the two clusters with inconsistent views of the data between runs. Implementing this scheme becomes far more challenging when more than two sites are involved!
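To make the distcp approach concrete, here is a minimal Python sketch of how a scheduled backup job might assemble a single `hadoop distcp` run. The NameNode hostnames, port and dataset path are hypothetical placeholders, and the helper name `build_distcp_command` is ours; only the `hadoop distcp` command and its `-update` option come from Hadoop itself.

```python
import shlex


def build_distcp_command(source_uri, target_uri, update=True):
    """Return the argument list for one DistCp copy between two clusters."""
    cmd = ["hadoop", "distcp"]
    if update:
        # -update copies only files that are missing or differ on the target.
        cmd.append("-update")
    cmd += [source_uri, target_uri]
    return cmd


# Hypothetical cluster addresses and dataset path, for illustration only.
cmd = build_distcp_command(
    "hdfs://primary-nn:8020/data/events",
    "hdfs://backup-nn:8020/data/events",
)

# Print the command a scheduler (cron, Oozie, etc.) would run on the source side.
print(shlex.join(cmd))
```

Each scheduled run of such a command launches a MapReduce job on the source cluster, and anything written between two runs exists only on the primary until the next copy completes, which is where the inconsistent views come from.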

Enter ECS

ECS provides a cloud-scale, geo-distributed, software-defined object-based storage platform that is perfect for unstructured data, Big Data applications and Data Lakes. ECS offers all the cost advantages of the public cloud running on commodity infrastructure while also providing enterprise reliability, availability and serviceability.

ECS features an unstructured storage engine that supports multi-protocol access to the same data via protocols such as Amazon S3, OpenStack Swift, EMC Atmos, Centera CAS and Hadoop HDFS, with file protocols such as NFS to follow in the near future.

ECS delivers an HDFS service that provides a comprehensive storage backend for Hadoop analytics clusters. ECS HDFS presents itself to Hadoop as a Hadoop Compatible File System, or HCFS as it is known in the Hadoop community. Using a drop-in client on the Hadoop compute nodes, organizations can extend existing Hadoop queries and applications to unstructured data in ECS and gain new business insights, without needing an ETL (Extract, Transform, Load) process to move the data to a dedicated cluster.

Geo-Distributed Hadoop Storage and Disaster Recovery with EMC ECS

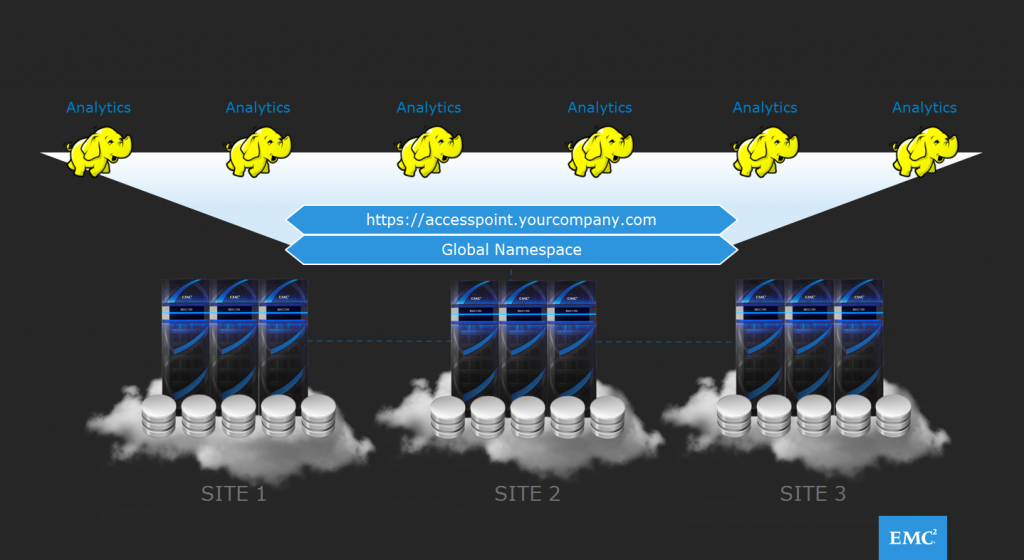

ECS has been architected from the ground up to operate across geographically distributed deployments as a single, coherent system. This makes it an enterprise-hardened, vendor-agnostic Hadoop storage platform capable of multi-site analytics, disaster recovery and continuous availability.

Traditional Hadoop backup strategies using active-passive clusters lead to under-utilized, standby, read-only resources on the backup cluster. Multiple geographically distributed ECS deployments can be configured as an active-active system, capable of simultaneous read and write access to the same set of data while ensuring strong consistency. The operative word is ‘strong’! Applications see the same view of data via any replicated ECS site, regardless of where the data was last written!

ECS’s geo-replication ensures that data is protected against site failures and disasters. ECS gives customers the option to link geographically dispersed systems and replicate data bi-directionally among these sites across the WAN. Several smart strategies, such as geo-caching, are used to reduce WAN traffic for data access.

That leads to the next natural question: If data is replicated to multiple sites, will I incur a large storage overhead? In order to reduce the number of copies in a multi-site deployment, ECS implements a data chunk contraction model, which dramatically reduces storage overhead in multi-site ECS environments. In fact, storage efficiency increases as more sites are added for geo-replication!
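This post does not spell out how chunk contraction works, so the following Python sketch is only an illustration under two stated assumptions: each site protects its chunks locally with a 12+4 erasure code, and with three or more sites the remote copies of chunks from different sites are combined by XOR into a single chunk. Both the parameters and the function name `storage_overhead` are hypothetical. Under those assumptions, the arithmetic shows why overhead falls as sites are added.

```python
def storage_overhead(sites, data_fragments=12, coding_fragments=4):
    """Raw bytes stored per byte of user data, under the assumptions above."""
    if sites < 1:
        raise ValueError("sites must be >= 1")

    # Assumed local protection: data + coding fragments for every data fragment.
    local = (data_fragments + coding_fragments) / data_fragments

    if sites == 1:
        return local
    if sites == 2:
        # With only two sites there is nothing to XOR against,
        # so each chunk is fully mirrored at the other site.
        return 2 * local

    # Assumed contraction: every (sites - 1) primary chunks share one
    # XOR-combined backup chunk held at a different site.
    return local * sites / (sites - 1)


for n in range(1, 9):
    print(f"{n} site(s): {storage_overhead(n):.2f}x raw capacity per byte stored")
```

In this model the two-site case is the most expensive, and every additional site after that spreads the cost of the backup chunk over more primary chunks, so the overhead approaches the single-site figure.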

ECS’s geo federation capability manages a geographically distributed environment as a single logical resource. Dense storage from servers in multiple sites can be pooled and presented as a large consumable repository for Hadoop analytics. The obvious benefits are ease of management and the ability to use resources from multiple data centers efficiently and transparently.

It is imperative for business continuity to detect and automatically handle temporary site or network failures. During a temporary site outage (TSO), ECS reverts to an eventual consistency model while still allowing read access to the data on all sites.

Check out the Top Reasons handout to see the many benefits of Hadoop & ECS

Long story short…

Enterprises need the ability to gather and reason over the soaring quantities of information across the globe. ECS provides the globally distributed storage infrastructure for Hadoop while enabling continuous availability, greater resource utilization and consistency.

In future blog posts, we will discuss ECS’s Big Data Analytics capabilities in more detail while providing some real-world use cases.

Stay tuned!

Try ECS today for free for non-production use by visiting www.emc.com/getecs. You can also take our product for a test drive with our developer portal – quickly create a cloud storage service account and upload your content.