Stepping into the world of generative AI (GenAI) is like entering a new realm, filled with unique challenges and opportunities. Just as Dorothy needed guidance to navigate Oz, organizations must prepare their data centers to handle the demands of AI infrastructure.

The Emerald City’s Compute Requirements

The deployment of AI infrastructure presents significant challenges, starting with compute requirements, the heaviest of which are for model training. Even if an organization is not training models from scratch, the compute requirements for large language model inferencing, along with fine-tuning and vector embedding for retrieval-augmented generation (RAG), go well beyond those of today’s applications.

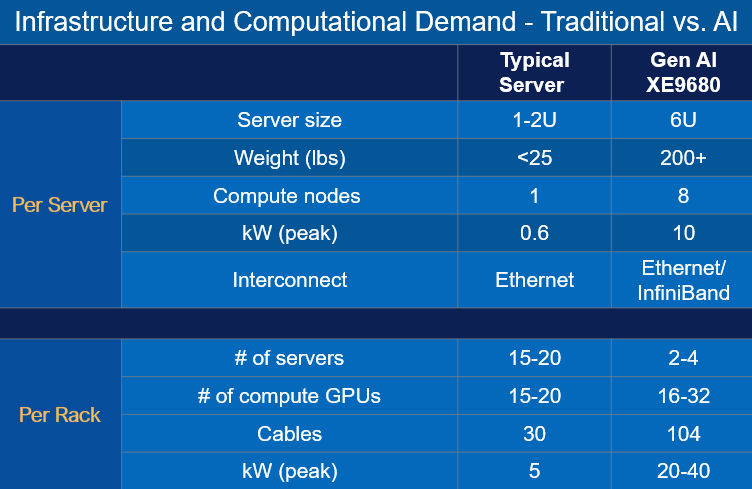

To meet these requirements, the physical size, weight, cabling, networking, power and cooling characteristics of GPU-powered generative AI servers are several times the corresponding specifications for standard servers. Careful planning is needed for organizations to get this AI infrastructure up and running in their data centers.

As an example, the Dell PowerEdge XE9680 server, which Dell has validated for inferencing use cases, is a 6U server with eight NVIDIA H100 GPUs. Due to its robust construction and cooling capacity, this server weighs more than 200 pounds. A rack with four XE9680 servers consumes 20 to 40 kW of power, contains more than 100 cables and weighs over 1000 pounds.
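To make that rack arithmetic concrete, here is a minimal planning sketch in Python. The per-server figures come from the example above (6U, roughly 200 pounds, and up to 10 kW each if four servers draw 40 kW). The rack overhead weight and the rack limits are hypothetical placeholders, not Dell specifications, so substitute your own facility numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    rack_units: int       # server height in U
    weight_lb: float      # approximate weight per server
    peak_power_kw: float  # assumed peak draw per server


@dataclass(frozen=True)
class RackLimits:
    usable_units: int     # hypothetical usable rack height
    max_load_lb: float    # hypothetical rack/floor load rating
    power_feed_kw: float  # hypothetical power available to the rack


def rack_totals(server: ServerSpec, count: int, overhead_lb: float = 0.0) -> dict:
    """Sum space, weight and peak power for `count` identical servers.

    `overhead_lb` is an assumed allowance for the rack frame, PDUs and cables.
    """
    return {
        "rack_units": server.rack_units * count,
        "weight_lb": server.weight_lb * count + overhead_lb,
        "peak_power_kw": server.peak_power_kw * count,
    }


def over_limit(totals: dict, limits: RackLimits) -> list:
    """Return the names of any budgets the planned rack exceeds."""
    checks = {
        "rack_units": (totals["rack_units"], limits.usable_units),
        "weight_lb": (totals["weight_lb"], limits.max_load_lb),
        "peak_power_kw": (totals["peak_power_kw"], limits.power_feed_kw),
    }
    return [name for name, (need, cap) in checks.items() if need > cap]


# Figures from the example above: 6U, about 200 lb, 40 kW / 4 servers = 10 kW each.
gpu_server = ServerSpec(rack_units=6, weight_lb=200.0, peak_power_kw=10.0)
totals = rack_totals(gpu_server, count=4, overhead_lb=250.0)

# A rack fed with only 30 kW (hypothetical) cannot cover the 40 kW peak.
print(over_limit(totals, RackLimits(usable_units=42, max_load_lb=3000.0, power_feed_kw=30.0)))
```

In this sketch the four servers fit comfortably by height and weight, and power is the budget that runs out first. That matches the emphasis on power and cooling in the sections that follow.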

Depending on your needs and the scale of your AI deployment, you may choose to apply the recommendations described in this blog post to your data center as a whole or to a dedicated AI section of the data center.

The Scarecrow’s Brain: Data Center Capacity

In the classic story, the Scarecrow says he needs a brain, and his plan is to follow Dorothy to find the Wizard. In the world of AI infrastructure, it’s vital to have a plan for data center size and space allocation for server and rack installation, airflow optimization and maintenance.

Dell Services deployment specialists can work with your team to design the space to handle a large number of AI infrastructure racks efficiently and provide additional capacity for future expansion.

Arranging racks so that technicians can easily reach servers and supporting infrastructure is key to good data center design, and it applies to AI infrastructure as well. Teams should establish a regular maintenance schedule that includes inspecting air filters, fans and cooling units and replacing them as needed.

The Lion’s Courage: Effective Airflow Management

Airflow is critical to managing the heat generated by servers and infrastructure systems. AI infrastructure consumes far more power than traditional servers, generating more heat and making airflow and cooling even more important.

Organizations should use structured airflow management strategies such as hot and cold aisle containment, routing cool air straight to server inlets and directing hot exhaust air away from the equipment. This increases cooling efficiency and reduces energy costs.
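For a rough sense of scale, the sketch below estimates how much air has to move through a rack to carry away its heat. It uses a widely quoted sea-level rule of thumb, CFM ≈ 3.16 × watts ÷ ΔT (°F), which is an approximation we are introducing here rather than a Dell sizing method. The function name and the example temperature rises are illustrative assumptions.

```python
def required_airflow_cfm(heat_load_watts: float, delta_t_f: float) -> float:
    """Approximate airflow in cubic feet per minute (CFM) for a given heat load.

    Rule of thumb at sea level: CFM ~= 3.16 * watts / delta_T, where delta_T is
    the air temperature rise across the equipment in degrees Fahrenheit.
    """
    if heat_load_watts < 0:
        raise ValueError("heat_load_watts must not be negative")
    if delta_t_f <= 0:
        raise ValueError("delta_t_f must be positive")
    return 3.16 * heat_load_watts / delta_t_f


# A 40 kW rack (upper end of the range cited earlier) at two assumed temperature rises.
for delta_t in (20.0, 30.0):
    cfm = required_airflow_cfm(40_000, delta_t)
    print(f"delta T {delta_t:.0f} F: about {cfm:.0f} CFM")
```

The estimate shows one reason containment can pay off. When hot and cold air do not mix, the equipment can run with a larger temperature rise, and the same heat load needs less airflow.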

The Tin Man’s Heart: Advanced Power and Cooling

To support high-density GPU servers, it is crucial to evaluate power and cooling needs. Planning should include assessments of total power requirements now and into the future, ensuring there are enough resources and backup systems in place to support operations without interruption. Data centers that were not designed for the higher demands of AI infrastructure may not be equipped to handle GPU-dense servers.

Consider investing in the latest power supply and transformer technologies that offer higher efficiency ratings. These not only reduce energy consumption but also minimize the environmental impact of the data center’s operations. Utilize uninterruptible power supplies (UPS) for emergency power and energy-efficient power distribution units (PDUs) to manage and distribute power effectively within the data center.

The Dell team will help you assess cooling requirements to manage the heat generated by dense AI workloads. As AI workloads intensify, traditional air cooling may not suffice. Implementing liquid cooling solutions can significantly reduce the thermal footprint, enabling more efficient heat removal and supporting stable, long-lived operation in higher-density configurations.

Toto’s Path: Cable Complexity, Layout and Organization

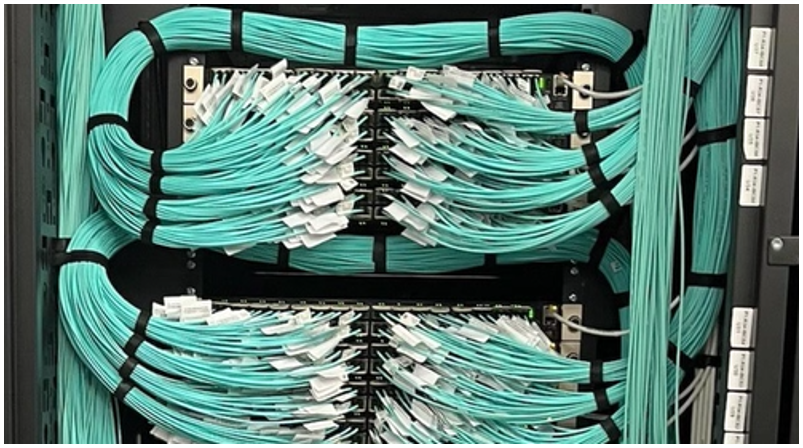

We couldn’t forget Toto! Like Toto navigating through the complexities of Oz, our AI deployment approach includes meticulous cable management solutions that support overhead routing and thermal management. Conveyance systems should be designed to separate power and data cables, minimizing interference and enhancing both safety and system reliability.

Within the rack, it’s important to reduce clutter to prevent air blockage and make it easy for technicians to locate the proper cable. Poorly routed cables can trap heat and lead to problems with switching infrastructure.

In addition, configuring a GenAI “pod” often means one networking rack serves multiple GPU server racks, resulting in more and longer inter-rack cables. To systematically organize this greater volume of cables and connections, best practices include designing and implementing a structured cabling and labeling system.
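As one illustration of what a labeling system can look like, the sketch below generates a label that names both ends of a cable by rack, rack unit and port. The `R..-U..-P..` format is a hypothetical convention invented for this example, not a Dell or industry standard. Adapt it to whatever scheme your site already uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    rack: int  # rack number within the pod
    unit: int  # rack-unit position of the device
    port: int  # port number on the device

    def label(self) -> str:
        """Format one end of a cable, e.g. R03-U24-P02 (hypothetical scheme)."""
        return f"R{self.rack:02d}-U{self.unit:02d}-P{self.port:02d}"


def cable_label(a: Endpoint, b: Endpoint) -> str:
    """Build one label naming both ends of a cable.

    The ends are sorted so the label reads the same no matter which end
    a technician starts from.
    """
    first, second = sorted((a, b), key=lambda e: (e.rack, e.unit, e.port))
    return f"{first.label()} <-> {second.label()}"


# A GPU server in rack 3 cabled to a switch in the networking rack (rack 1).
gpu_port = Endpoint(rack=3, unit=24, port=2)
switch_port = Endpoint(rack=1, unit=40, port=17)
print(cable_label(gpu_port, switch_port))  # R01-U40-P17 <-> R03-U24-P02
```

Printing the same text on both ends of every inter-rack cable lets a technician trace a connection from either rack without consulting a separate map.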

To accommodate future growth, deploy adjustable cable management systems like modular panels and adjustable racks. Dell’s suite of AI professional services includes infrastructure deployment services to assist with cable layout and management.

To further simplify on-site deployment, Dell can build, configure, cable and test AI infrastructure at the factory, significantly reducing the amount of work that needs to be done at your data center.

Dorothy’s Wisdom: Considerations for the Disposal of Packaging

Dell is conscious of the environmental and logistical implications associated with the disposal of packaging. Choose recyclable or biodegradable materials for cable packaging and implement disposal protocols that prioritize sustainability, helping meet regulatory requirements and improving the data center’s environmental profile.

Organizations should also evaluate their data centers for opportunities to reduce the power consumption (and the resulting cooling requirements) of existing infrastructure. This can help offset some of the needs of AI infrastructure and reduce the data center’s carbon footprint.

Dell solutions aim to minimize waste and manage disposal costs efficiently, ensuring that the deployment of AI infrastructure is as environmentally friendly as it is technologically advanced.

Get on the Yellow Brick Road to an AI-ready Data Center

As the main characters in “The Wonderful Wizard of Oz” overcame their challenges with a little help from their friends, Dell Technologies can help your organization successfully navigate the journey to a GenAI-ready data center with expert planning and support.

To learn more about preparing your data center for the new AI world, check out Dell Professional Services for GenAI or reach out to your Dell representative.