Data today is more distributed than ever before. Enterprises constantly move and consolidate data and compute assets across on-premises, cloud and edge locations, balancing factors such as data gravity, the cost and availability of compute, security, business SLAs and scale of usage. As new use cases and workloads such as GenAI emerge, users are continually looking for the software and hardware stacks that meet their requirements. This often means optimizing the end-to-end solution stack for an organization's scale, usage patterns and compliance requirements.

According to Gartner, more than 80% of enterprises will have used GenAI by 2026, up from a mere 5% in 2023. This massive wave of adoption means users will look for quick and easy ways to explore GenAI capabilities and learn how to deliver results within their organizations, especially where cloud-based solutions offer a low barrier to entry. Dell recently launched Dell APEX File Storage for Public Cloud, an enterprise-class, high-performance software-defined file storage solution that brings PowerScale OneFS software to your choice of public cloud. By providing common storage services across your locations, it creates a universal storage layer that lets you move data seamlessly between on-premises and the cloud. As with on-premises deployments, you get the same storage performance, operational consistency and enterprise-class data services, so you can focus on innovation with the world's most flexible,¹ secure² and efficient³ scale-out file storage. Dell APEX File Storage is currently available on AWS and Azure.

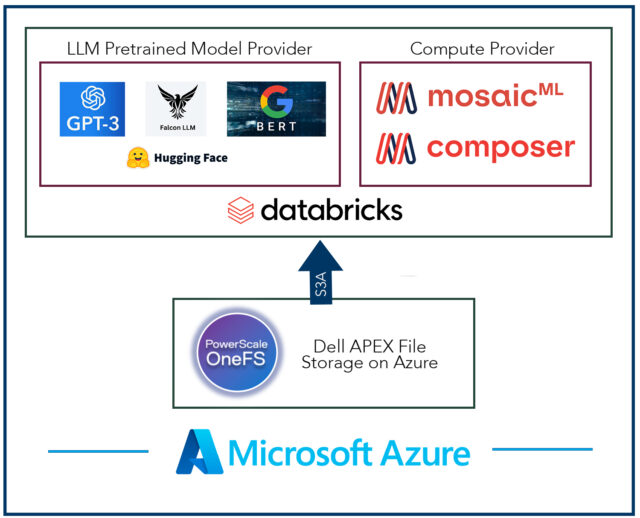

Dell has partnered with Databricks and Hugging Face to simplify how customers get started on their GenAI journey. In this white paper, we demonstrate how users can combine structured and unstructured data stored in Dell APEX File Storage for Azure with the rich ML libraries from Databricks Mosaic AI and Hugging Face and the LLMs of their choice to deliver AI-driven outcomes for their businesses. These outcomes include training a new model, fine-tuning an existing LLM and customizing LLM responses through retrieval-augmented generation (RAG). The simplified management experience in the cloud enables easy scalability and interoperability with a wide range of tools and technologies in the data and AI ecosystem.

The white paper showcases two key use cases:

- Use of the Hugging Face Transformers library for end-to-end LLM fine-tuning. We use different types of LLMs, such as OpenAI GPT, Google BERT and Falcon. We tokenize the text data, train and optimize the model, and save the fine-tuned model and tokenizer back to Dell APEX File Storage. We then run sample inference by reading the data and the fine-tuned model from the file storage to generate the appropriate text output.

- Use of the Databricks Mosaic AI Composer library for image recognition and classification using the ResNet architecture (ResNet-56). We use TorchVision's dataset libraries to generate synthetic datasets for training and testing. These datasets are stored in Dell APEX File Storage and accessed using S3A.
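In the first use case, the tokenized text has to be shaped into training examples before fine-tuning. For causal models such as GPT or Falcon, a common approach is to concatenate the tokenized documents and split them into fixed-size blocks, with the labels set to the inputs. The sketch below mirrors the `group_texts` preprocessing used in Hugging Face's causal language modeling examples; it assumes the tokenizer has already produced `input_ids` and `attention_mask` lists, and the `block_size` default is an arbitrary illustrative value, not one taken from the white paper. BERT, as a masked language model, is trained with a different objective and data collator.

```python
from typing import Dict, List


def group_texts(
    examples: Dict[str, List[List[int]]], block_size: int = 128
) -> Dict[str, List[List[int]]]:
    """Concatenate tokenized examples and split them into fixed-size blocks.

    `examples` maps each field (e.g. "input_ids", "attention_mask") to a list of
    token-id lists, as returned by a batched tokenizer call.
    """
    # Flatten each field into one long sequence of tokens.
    concatenated = {k: [tok for seq in v for tok in seq] for k, v in examples.items()}
    total_length = len(concatenated["input_ids"])
    # Drop the trailing remainder so every block has exactly block_size tokens.
    total_length = (total_length // block_size) * block_size
    result = {
        k: [t[i : i + block_size] for i in range(0, total_length, block_size)]
        for k, t in concatenated.items()
    }
    # For causal LM training the labels are a copy of the inputs;
    # the model shifts them by one position internally when computing the loss.
    result["labels"] = [block.copy() for block in result["input_ids"]]
    return result
```

With `block_size=4`, three tokenized snippets of 3, 4 and 2 tokens yield two full blocks of 4 tokens, and the ninth token is dropped.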
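The "56" in ResNet-56 refers to the number of weighted layers in the CIFAR-style ResNet family from the original ResNet paper, which has depth 6n + 2: one initial 3x3 convolution, three stages of n basic blocks (two 3x3 convolutions each) with 16, 32 and 64 filters, and a final fully connected layer. The helper below is a small illustrative sketch of that arithmetic only; `cifar_resnet_layout` is a hypothetical name and is not part of Composer or TorchVision.

```python
def cifar_resnet_layout(depth: int) -> dict:
    """Return the stage layout of a CIFAR-style ResNet with `depth` weighted layers.

    Hypothetical helper illustrating the 6n + 2 construction: 1 stem conv,
    3 stages x n basic blocks x 2 convs, and 1 fully connected layer.
    """
    if depth < 8 or (depth - 2) % 6 != 0:
        raise ValueError("CIFAR ResNet depth must have the form 6n + 2 with n >= 1")
    n = (depth - 2) // 6
    return {
        "blocks_per_stage": n,
        "stage_filters": [16, 32, 64],
        "conv_layers": 1 + 3 * n * 2,  # stem conv plus two convs per basic block
        "fc_layers": 1,
    }
```

For ResNet-56 this gives n = 9 basic blocks per stage: 55 convolutional layers plus one fully connected layer.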

In both use cases, the Spark cluster reads the data used for training and fine-tuning from Dell APEX File Storage, and all input/output operations between the compute and storage clusters are handled through the Spark distributed computation framework using the S3A protocol.
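S3A is Hadoop's connector for S3-compatible object stores, and OneFS can expose data through an S3 protocol endpoint. The sketch below shows the kind of Spark settings that point S3A at such an endpoint; the function name, endpoint and credentials are hypothetical placeholders, not values from the white paper. Keys prefixed with `spark.hadoop.` are passed through by Spark to the underlying Hadoop configuration.

```python
from typing import Dict


def s3a_spark_conf(
    endpoint: str, access_key: str, secret_key: str, use_ssl: bool = True
) -> Dict[str, str]:
    """Build Spark settings that direct the Hadoop S3A connector to an S3-compatible endpoint.

    Hypothetical helper; all argument values are placeholders supplied by the caller.
    """
    return {
        "spark.hadoop.fs.s3a.impl": "org.apache.hadoop.fs.s3a.S3AFileSystem",
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # Path-style URLs (endpoint/bucket/key) are commonly required by
        # S3-compatible stores that do not provide virtual-host-style DNS.
        "spark.hadoop.fs.s3a.path.style.access": "true",
        "spark.hadoop.fs.s3a.connection.ssl.enabled": str(use_ssl).lower(),
    }
```

Each key/value pair can be applied with `SparkSession.builder.config(key, value)` before calling `getOrCreate()`, after which datasets can be read and written with paths of the form `s3a://<bucket>/<path>`. In practice, credentials are better supplied through a secret store than hard-coded.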

As GenAI capabilities and use cases evolve with growing business needs, it's important to invest in a future-proof data landscape that provides the scale and flexibility enterprises need to innovate at a steady pace. Learn more about Dell's AI and data management solutions.

1 Based on Dell analysis, February 2023.

2 Based on Dell analysis comparing cybersecurity software capabilities offered for Dell PowerScale vs. competitive products, September 2022.

3 Based on Dell analysis comparing efficiency-related features: data reduction, storage capacity, data protection, hardware, space, lifecycle management efficiency, and ENERGY STAR certified configurations, June 2023.