Flashback to when I was 10 years old. I’m trying to assemble two 11-person football teams on my football-field rug, using my toys as the players. I pull out old Star Wars figures, Evel Knievel, Stretch Armstrong and the Bionic Man from the bottom of my toy chest. Luckily, my older sister didn’t throw away her old Barbie and Ken dolls, since I need them to complete my teams; they made outstanding cornerbacks. While my interests might have shifted from action figures to sports, I’m so glad my sister and I didn’t get rid of our toys after only playing with them a few times.

This scenario is true. It is also a great analogy for why we collectively have a growing data gravity problem. I’ve made it my professional mission to help companies proactively solve their data gravity challenges (something akin to organizing and cleaning up my toy collection) before they become an unwieldy data-hoarding problem.

What is Data Gravity?

The concept of data gravity, a term coined by Dave McCrory in 2010, aptly describes data’s increasing pull – attracting applications and services that use the data – as data grows in size. While data gravity will always exist wherever data is collected and stored, left unmanaged, massive data growth can render data difficult or impossible to process or move, creating an expensive, steep challenge for businesses.

Data gravity also describes the opportunity of edge computing. Shrinking the space between data and processing means lower latency for applications and faster throughput for services. Of course, there’s a potential “gotcha” to discuss here shortly.
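To see why proximity matters, here is a minimal sketch of the distance component of latency alone. It assumes signals travel through optical fiber at roughly 200,000 km/s (about two-thirds the speed of light in a vacuum) and ignores routing, queuing and processing delays. The function name and the sample distances are illustrative, not taken from any particular deployment.

```python
# Approximate signal speed in optical fiber (assumption: about 2/3 of c).
FIBER_KM_PER_SECOND = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay, in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_SECOND * 1000


# Hypothetical distances between an application and the data it uses.
for label, km in [("same metro area", 50), ("cross-country", 4_000)]:
    print(f"{label}: {round_trip_ms(km):.1f} ms round trip")
```

Every request pays this delay at least once, so an application that makes many small calls to distant data feels the distance far more than a single number suggests.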

As Data Grows, So Does Data Gravity

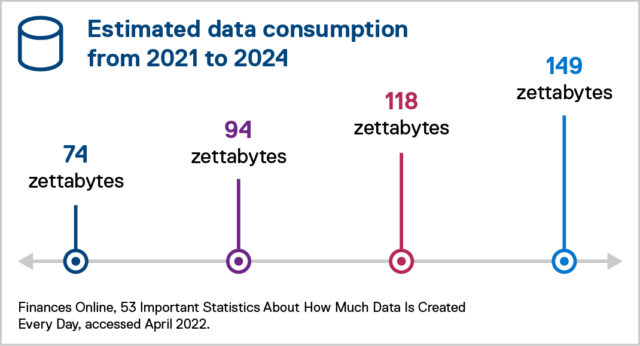

Data gravity and its latency-inducing power are an escalating concern. As with physical gravity, the greater the mass, the greater the pull. The ever-increasing pace of data creation is staggering and is spurring a sharp rise in data gravity. Consider estimates that roughly 149 zettabytes of data will be created in 2024, along with the widely cited figure of about 1.7 MB created every second for each person.

What is a zettabyte? A zettabyte is a 1 followed by 21 zeroes: 1,000,000,000,000,000,000,000 bytes.

What do 21 zeroes equate to? According to the World Economic Forum, “At the beginning of 2020, the number of bytes in the digital universe was 40 times bigger than the number of stars in the observable universe.”
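For a sense of scale, the unit arithmetic can be sketched in a few lines. This uses decimal (SI) units, and the 1 TB drive is only an illustrative yardstick, not something taken from the estimates quoted above.

```python
# Decimal (SI) storage units expressed in bytes.
UNITS = {
    "MB": 10**6, "GB": 10**9, "TB": 10**12,
    "PB": 10**15, "EB": 10**18, "ZB": 10**21,
}


def to_bytes(value: int, unit: str) -> int:
    """Convert a whole number of units to bytes."""
    return value * UNITS[unit]


one_zb = to_bytes(1, "ZB")
print(f"1 ZB = {one_zb:,} bytes")

# How many 1 TB drives (a hypothetical yardstick) would hold 149 ZB?
drives = to_bytes(149, "ZB") // UNITS["TB"]
print(f"149 ZB = {drives:,} one-terabyte drives")
```

In other words, 149 zettabytes would fill 149 billion one-terabyte drives.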

Consequently, this forecast data growth will continue to increase data gravity – in a massive way. As with most situations, data gravity brings a host of opportunities and challenges to organizations around the world.

Data Gravity’s Hefty Impact on Business

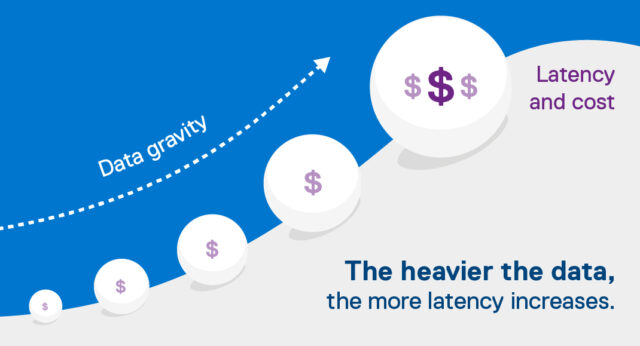

Data gravity matters for two reasons: latency and cost. The heavier the data, the higher the latency. More latency means less throughput, which increases costs for organizations. Reactive remedies create additional expense for businesses because moving data is neither easy nor cheap. In fact, after a certain amount of data is amassed, movement may not be feasible at all.
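A rough back-of-the-envelope sketch shows why moving data gets harder as it accumulates. It estimates transfer time only, not cost, and it assumes a dedicated link running at a fixed rate. The 10 Gbps link, the `efficiency` parameter and the data sizes are hypothetical values chosen for illustration.

```python
SECONDS_PER_DAY = 86_400
PB = 10**15  # one petabyte in bytes (decimal units)


def transfer_days(size_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days needed to move size_bytes over a link of link_gbps gigabits per second.

    efficiency is the usable fraction of the nominal link rate (an assumption).
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    bytes_per_second = link_gbps * 1e9 * efficiency / 8
    return size_bytes / bytes_per_second / SECONDS_PER_DAY


# Transfer time grows in direct proportion to the amount of data.
for petabytes in (1, 10, 100):
    days = transfer_days(petabytes * PB, link_gbps=10)
    print(f"{petabytes:>3} PB over 10 Gbps: about {days:,.0f} days")
```

Under these assumptions, one petabyte takes a little over nine days at a full 10 Gbps, while a hundred petabytes takes roughly two and a half years, which is the point at which a move stops being practical.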

A simplified view of data gravity’s “snowball effect” is shown here:

Balancing Data Gravity

Data gravity, however, is not all bad. It all depends on how it’s managed. As with most things in life, handling it is all about balance. Consider two scenarios in the context of data gravity: centralized data centers and data creation at the edge.

- Centralized data center – In a centralized data center, the data storage and the servers hosting the applications and data management services sit in close proximity, and administrators and storage specialists are on hand to keep them side by side. Traditional applications and services, such as relational databases and backup and recovery, must be continuously updated to keep up with faster-growing data.

- Data creation at the edge – Edge locations inherently help to achieve lower latency and faster throughput. According to IDC, data creation at the edge is catching up to data creation in the cloud as organizations move apps and services to the edge to boost compute performance. However, as edge locations grow, onsite administrators generate more data at these locations. As a result, data stores grow and grow, often in space-constrained environments. As the number of edge locations increases, each one can compound complexity. Ultimately, organizations can end up with terabytes or petabytes of data spread out across the globe, or beyond (e.g., satellites, rovers, rockets, space stations, probes).

The bottom line is that data gravity is real and its already significant impact on business will only escalate over time. Proactive, balanced management today is the must-have competency for businesses of all sizes around the world. This will ensure that data gravity is used “for the good” so that it doesn’t weigh down business and impede tomorrow’s progress and potential.

A Next Step

In an upcoming blog, we will look at what needs to be done about data gravity across data centers and edge locations. The data gravity challenge remains. It is location-agnostic and must be proactively managed. Left unchecked, data gravity snowballs into bigger issues. Can’t figure out how to move ten tons of bricks? Put it off and you’ll have to move twenty, forty, or one hundred tons.