Are you ready to reap the benefits of Big Data?

Have you decided on:

- Various types of infrastructures – bare metal, converged, hyper-converged, cloud, hybrid?

- Various storage and data management tools – Apache Hadoop, Cloudera, Hortonworks, Cassandra, MongoDB?

- Data mining solutions – RapidMiner, Kaggle, Optimove?

- Data analysis tools – Qubole, Domo, MicroStrategy, BigML?

- Data integration tools – Pentaho, Informatica, Stitch, Magento, Blockspring?

- Visualization tools – Tableau, Silk, CartoDB, Chart.io, Google Charts?

- Languages – R, Python, RegEx?

Also, are you clear on structured and unstructured data, graph databases, in-memory databases, distributed databases, privacy restrictions, security challenges and the emerging field of Internet of Things?

Once you understand and decide on everything above, you are ready to reap Big Data benefits! Isn’t this confusing, daunting and downright scary? I have only taken a small subset of tools and technologies available in the market to make my point. But the world of Big Data is rich with an array of complementary, competing and confusing technologies. It is difficult for a typical business to fully grasp these technologies, conduct research, align them with their requirements, implement and finally start using them.

The confusion is akin to Chaos described in Greek Mythology.

Theogony’s version of the genesis of the Greek gods begins with just Chaos – a collection of random, unordered things or a void. From Chaos came Gaea (Earth), Eros (Desire), Uranus (Heaven) and Pontus (Sea). Uranus and Gaea’s union produced 12 Titans, including the youngest, Cronus.

Uranus feared his children overthrowing him, so he pushed them back into the womb of Gaea. To retaliate, Gaea gave Cronus a sickle. Cronus maimed his father, then rescued his 11 siblings. Cronus and Rhea went on to have several children, who came to be known as the Olympian gods.

Like his father, Cronus came to fear his children overthrowing him and likewise started to swallow his children. To save her youngest child, Zeus, Rhea tricked her husband and sent Zeus to Crete for protection.

As prophesied, when he reached manhood, Zeus rescued his five elder siblings and successfully waged war against his father and overthrew Cronus, casting him and the other Titans into the depths of the Underworld. From then on, Olympian gods ruled the world!

I want to contrast this classic story with the rise of the complex Big Data environment that includes several versions and combinations of platforms and hundreds of tools.

How do we make sense of this Chaos? There is a wide spectrum of solutions, ranging from DIY (Do It Yourself) deployments to pre-engineered packaged solutions. Both extremes have their own pitfalls.

Many DIY deployments start with software like Hadoop installed on basic servers with direct-attached storage. As data and analytics activities grow, the storage and computation resources become constrained. Many Hadoop clusters come into existence based on the budgeting restrictions and politics of an organization. As a result, managing these many environments becomes expensive and inefficient.

Many pre-engineered solutions require that the existing infrastructure and solutions in an organization be tossed aside and replaced with the new solution. This is impractical on several fronts – many organizations have made considerable investments in their existing infrastructure and have implemented several useful applications with their own customizations. On top of this, the new packaged solutions offer no assurance that they will work and scale per the requirements of the organization.

Organizations are in a fix. On one hand, they need help with the cost and maintenance of the existing DIY solution, but on the other hand they are reluctant to write off existing investments to enable pre-engineered solutions.

What is the way out?

The solution is Dell consulting’s Elastic Data Platform (EDP) approach. It augments the existing infrastructure in an organization to provide an elastic and scalable architecture. It includes containerized compute nodes, decoupled storage and automated provisioning.

Elastic Data Platform: Definition

The EDP solution approach employs containers (Docker) to provide the compute power needed, along with a Dell Isilon storage cluster with HDFS. This is all managed using software from BlueData, which provides the ability to spin up instant clusters for Hadoop, Spark, and other Big Data tools running in Docker containers. This allows users to quickly create new containerized compute nodes using predefined templates, and then access their data via HDFS on the Isilon system. With a containerized compute environment, users can quickly and easily provision new Hadoop systems or add compute nodes to existing systems – limited only by the available physical resources.

By consolidating the storage requirement onto an Isilon storage cluster, the redundant storage needed for data protection drops from the 3x replication factor of a traditional Hadoop deployment to roughly 20% overhead. Consolidation also enables the sharing of data across systems and extends enterprise-level features such as snapshots, multi-site replication, and automated tiering that moves data to the appropriate storage tier as its usage intensity changes over time.

After implementing a containerized Big Data environment with BlueData, EDP deploys a centralized security access policy engine (provided by BlueTalon). It then creates and deploys a common set of security policies via enforcement points to all of the applications accessing the data. This guarantees that a consistent set of rules is defined and enforced across all data platforms, and supports data governance and compliance by allowing users or applications access only to the data to which they are entitled.

The result is a secured, easy-to-use, and elastic platform for Big Data with a flexible compute layer and a consolidated storage layer that delivers performance, management, and cost efficiencies unattainable using traditional Hadoop systems.

Elastic Data Platform: Principles

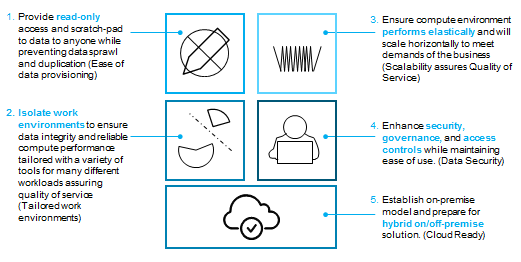

There are 5 key principles used to guide the deployment of the Elastic Data Platform:

- Easy Data Provisioning: provide read-only access and scratch pad data to anyone within the organization while preventing data sprawl and redundancy.

- Tailored Work Environments: isolate environments between users to ensure data integrity and reliable compute performance tailored with a variety of tools for many different workloads – assuring quality of service.

- Scalability: ensure the compute environment performs elastically and scales horizontally to efficiently meet business demands and deliver high quality of service.

- Data Security: enhance security, governance, and access controls while maintaining ease of use.

- Cloud Ready: establish an on-premises model while preparing for a hybrid on/off-premises solution.

Elastic Data Platform: Solution Details

Separating Compute and Storage

Although decoupled storage is not required with the Elastic Data Platform, once the data set becomes large, Dell’s Isilon solution offers a compelling ROI and ease of use with scalability. Isilon provides several capabilities that extend the value of the Elastic Data Platform:

- Separation of the storage allows for independent scaling from the compute environment

- Comprehensive data protection using Isilon's native erasure coding reduces the protection overhead from 2X to 0.2X.

- Creation of read-only snapshots, on some or the entire data set, effectively requires no additional storage space.

- Auto-tiering of data (i.e. Hot, Warm, and Cold) maximizes performance/throughput and cost effectiveness.
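The protection-overhead figures quoted above can be checked with simple arithmetic. The following is a minimal sketch, assuming that "2X overhead" corresponds to 3x replication (one usable copy plus two extra) and that "0.2X" is the erasure-coded overhead; the 100 TB data set size and the function name are hypothetical and chosen only for illustration.

```python
def raw_capacity_needed(usable_tb: float, overhead: float) -> float:
    """Raw capacity required to hold `usable_tb` of data.

    `overhead` is the extra protection storage as a fraction of the
    usable data: 2.0 for 3x replication, 0.2 for the erasure-coded
    figure quoted in the text.
    """
    return usable_tb * (1.0 + overhead)


usable_tb = 100.0  # hypothetical data set size

replicated = raw_capacity_needed(usable_tb, overhead=2.0)
erasure_coded = raw_capacity_needed(usable_tb, overhead=0.2)
savings = 1.0 - erasure_coded / replicated

print(f"3x replication: {replicated:.0f} TB raw")
print(f"0.2X overhead:  {erasure_coded:.0f} TB raw")
print(f"Raw capacity saved: {savings:.0%}")
```

Under these assumptions the same usable data needs well under half the raw capacity, which is where much of the consolidation benefit would come from.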

Deployment and Automation

When deploying a cluster, BlueData enables the allocation of the compute clusters while Isilon HDFS provides the underlying storage for the compute clusters. Clusters can be deployed using standard profiles based on the end users’ requirements (e.g. a cluster could have high compute resources with large memory and CPU and have an average throughput storage requirement).

Decoupling storage and isolating compute gives the organization an efficient and cost-effective way to scale the solution, providing dedicated environments suited to the various users and workloads coming from the business.

Tenants within BlueData are logical groupings that can be defined by organization (e.g. Customer Service, Sales, etc.). Each tenant has dedicated resources (CPU, memory, etc.) that can then be allocated to clusters. Clusters also have their own set of dedicated resources, drawn from the tenant resource pool.
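The relationship between tenants, clusters, and resource pools can be sketched in a few lines. This is an illustrative model only, not BlueData's API: the `Tenant` and `Cluster` classes, their fields, and the example sizes are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Cluster:
    name: str
    cpu_cores: int
    memory_gb: int


@dataclass
class Tenant:
    """A logical grouping (e.g. Sales) with a dedicated resource pool."""
    name: str
    cpu_cores: int
    memory_gb: int
    clusters: List[Cluster] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_cores - sum(c.cpu_cores for c in self.clusters)

    def free_memory(self) -> int:
        return self.memory_gb - sum(c.memory_gb for c in self.clusters)

    def create_cluster(self, name: str, cpu_cores: int, memory_gb: int) -> Cluster:
        # A cluster may only draw from what is left in the tenant's pool.
        if cpu_cores > self.free_cpu() or memory_gb > self.free_memory():
            raise ValueError(f"tenant {self.name!r} has insufficient resources")
        cluster = Cluster(name, cpu_cores, memory_gb)
        self.clusters.append(cluster)
        return cluster

    def release_cluster(self, name: str) -> None:
        # Releasing a cluster returns its resources to the tenant pool.
        self.clusters = [c for c in self.clusters if c.name != name]


sales = Tenant("Sales", cpu_cores=32, memory_gb=128)
sales.create_cluster("spark-adhoc", cpu_cores=16, memory_gb=64)
print(sales.free_cpu(), sales.free_memory())
```

The point of the model is the invariant it enforces: a cluster can never take more than its tenant's remaining pool, so one group's workload cannot starve another's.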

Applications that are containerized via Docker can be added to the BlueData App Store and customized by the organization. Those application images are then available to deploy as clusters in various “Flavors” (i.e. different configurations of compute, memory, and storage).

The data residing on HDFS is partitioned based on rules and definable policies. The physical deployment of the Isilon storage allows for tiering, and the placement of the data blocks on the physical storage is maintained by definable policies in Isilon to optimize performance, scale, and cost.
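As a rough illustration of what a definable tiering policy might look like, the sketch below classifies data by how recently it was accessed. It does not use Isilon's actual policy syntax; the `choose_tier` function and the 30-day and 180-day thresholds are assumptions made purely for the example.

```python
from datetime import datetime, timedelta

# Hypothetical policy thresholds; a real deployment would tune these.
HOT_WINDOW = timedelta(days=30)
WARM_WINDOW = timedelta(days=180)


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Pick a storage tier from the time elapsed since the last access."""
    age = now - last_accessed
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"


now = datetime(2024, 1, 1)
for days_ago in (5, 90, 400):
    tier = choose_tier(now - timedelta(days=days_ago), now)
    print(f"last accessed {days_ago} days ago -> {tier}")
```

A real policy would likely combine several attributes (path, file type, size, access time), but the idea is the same: rules decide placement, not the user.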

Isilon is configured to generate read-only snapshots of directories within HDFS based on definable policies.

Users gain access to the data through DataTaps in BlueData. These DataTaps are associated with Tenants and are mapped (aka “mounted”) to directories in Isilon. Each DataTap can be specified as Read-only or Read/Write. DataTaps are configured to connect to both the Isilon snapshots and the writeable scratch-pad space.
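The access pattern described here, read-only taps onto snapshots plus a writeable scratch pad, can be modeled as follows. This is a conceptual sketch rather than BlueData's DataTap interface: the `DataTap` class, the `is_allowed` helper, and the directory paths are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataTap:
    name: str
    tenant: str
    storage_path: str  # hypothetical directory on the shared storage
    read_only: bool


def is_allowed(tap: DataTap, tenant: str, operation: str) -> bool:
    """Return True if `tenant` may perform `operation` through `tap`."""
    if tenant != tap.tenant:
        return False  # taps are only visible to their own tenant
    if operation == "read":
        return True
    if operation == "write":
        return not tap.read_only
    raise ValueError(f"unknown operation: {operation}")


snapshot_tap = DataTap("sales-snapshot", "Sales", "/data/sales/snapshot", read_only=True)
scratch_tap = DataTap("sales-scratch", "Sales", "/scratch/sales", read_only=False)

print(is_allowed(snapshot_tap, "Sales", "write"))  # snapshot data stays immutable
print(is_allowed(scratch_tap, "Sales", "write"))   # scratch pad accepts writes
```

Pairing an immutable snapshot tap with a separate scratch tap is what lets many users work against the same source data without copying it or risking changes to it.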

Once users have finished their work (based on informing the administrators that they are finished, or based on their environment time being up), the system frees the temporary space on Isilon and adjusts the size of the compute environment so that those resources can be made available to other users.

Centralized Security Policy Enforcement

Organizations with multiple users accessing multiple environments, through multiple data analysis tools and data management systems, struggle to create and enforce data access policies consistently. Often, these systems have different, inconsistent authorization methods. For example, a Hadoop cluster may be Kerberized, but the MongoDB cluster may not be, and the Google BigQuery engine would have its own internal authorization mechanism. This means that administrators must create policies for each data platform and independently update them every time there is a change. In addition, if there are multiple Hadoop clusters or distributions, the administrator must define and manage data access for each one independently, at the risk of inconsistency across the systems.

The solution is to leverage a centralized security policy creation and enforcement engine, such as BlueTalon. In this engine, the administrator simply creates policies once by defining the access rules (i.e. Allow, Deny, and Mask) for each of the different roles and attributes for the users accessing the system. Then, distributed enforcement points are deployed to each of the data systems that enforce the centralized policies against the data. This greatly simplifies the overall Big Data environment and allows for greater scalability while maintaining governance and compliance without impacting user experience or performance.
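The "define once, enforce everywhere" idea can be illustrated with a small sketch. It is not BlueTalon's API or policy language: the `POLICIES` table, the role and field names, and the `enforce` function are hypothetical, and the default-deny behavior for unlisted combinations is an assumption of this example.

```python
from enum import Enum
from typing import Dict, Tuple


class Action(Enum):
    ALLOW = "allow"
    DENY = "deny"
    MASK = "mask"


# Central policy table, defined once: (role, field) -> action.
POLICIES: Dict[Tuple[str, str], Action] = {
    ("analyst", "customer_id"): Action.ALLOW,
    ("analyst", "email"): Action.MASK,
    ("analyst", "ssn"): Action.DENY,
    ("auditor", "customer_id"): Action.ALLOW,
    ("auditor", "email"): Action.ALLOW,
    ("auditor", "ssn"): Action.MASK,
}


def enforce(role: str, record: Dict[str, str]) -> Dict[str, str]:
    """Apply the central policies to one record, as an enforcement point would."""
    result: Dict[str, str] = {}
    for field_name, value in record.items():
        # Assumption: anything not explicitly listed is denied.
        action = POLICIES.get((role, field_name), Action.DENY)
        if action is Action.ALLOW:
            result[field_name] = value
        elif action is Action.MASK:
            result[field_name] = "****"
        # DENY: the field is omitted from the result entirely.
    return result


row = {"customer_id": "42", "email": "jane@example.com", "ssn": "123-45-6789"}
print(enforce("analyst", row))
print(enforce("auditor", row))
```

Because every enforcement point would consult the same table, changing a rule in one place changes the behavior on every data platform, which is the consistency the centralized approach is meant to provide.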

Elastic Data Platform: Conclusion

The Elastic Data Platform approach from Dell is a powerful and flexible solution to help organizations get the most out of their existing Big Data investments while providing scalability, elasticity, and compliance to support the ever-growing needs of the business. Based on key principles, the approach provides the ease and speed of provisioning that the business needs and the simplicity of deployment and cost sensitivity that IT requires, while ensuring that everything follows the governance and compliance rules required by the organization.

Organizations are ready to move from Chaos to the Olympian Gods. With the Elastic Data Platform, any organization can embrace a better solution – without worrying about existing investments and valuable customizations.

Some might wonder when the best time is to begin their journey to the Olympian Gods. Well, there is a Chinese saying that the best time to plant a tree was twenty years ago, and the next best time is now!

What are you waiting for?