For over a year, I’ve been writing blogs about composable infrastructure, which is the idea that data centers ought to be defined by the needs of applications – not by the ideas of a data center admin or an IT executive. And, why is this important? As workloads become more dynamic, infrastructures have had to become more dynamic as well. Composable infrastructure turns physical infrastructure into pools of modular building blocks that workloads can use, as needed, to provide a service.

Previous blogs:

- Memory Centric Architecture Vision

- Gen-Z – An Open Fabric Technology Standard on the Journey to Composability

- Reality Check: Is Composable Infrastructure Ready for Prime Time?

- A Practical View of Composable Infrastructure

However, I keep encountering confusion and myths about composable that need to be addressed. For example, the other day a customer asked me about a rack scale solution that would let 32 standard two-socket servers magically turn into a true 64-socket SMP (symmetric multiprocessing) machine through this thing called composable or rack scale systems – this is a myth, BTW.

It’s becoming more and more evident that we have a composable hype problem. While the OEM architecture community (Dell, HPE, Lenovo, Huawei, Cray, IBM…) is working to address the architectural needs via collaboration in the Gen-Z consortium, it appears to me that some in the industry are confused as to what is possible and what is hype.

So let’s clarify what we’re exploring, its value and where we are on the journey. And, since this blog is long, here’s the “Cliff Notes”:

- First, this blog in no way implies that Dell doesn’t value or have composable infrastructure offerings; it implies the opposite. Composable infrastructure – and Dell offerings – allow organizations to be more agile, have lower operational costs and accelerate innovation.

- That being said, there’s a lot of marketing hype that talks about composable and the nirvana end state without distinguishing the two. That’s creating a lot of confusion.

- OEM architects know that composable isn’t a full-fledged set of capabilities today (as is evident from their involvement in Gen-Z). That nirvana has not been reached, and your warning alarms should go off for any vendor who tells you otherwise.

- Achieving the nirvana state or what I call “full composability” means customers can disaggregate cores, DRAM, storage class memory, accelerators, storage and networking. No one can disaggregate the components on the server to date, and the industry must embrace a memory-centric architecture with disaggregation to make this a reality.

- While there is plenty of marketing hype, Dell is working across the industry to bring together an ecosystem of partners to deliver the full potential of composable infrastructure.

Composable Definition & Benefits

To begin, let’s (again) address the question: What is composable?

“Composable” is a service-centric model with a reassignment of heterogeneous infrastructure resources to rapidly meet the needs of variable workloads. It has software-defined characteristics applied to hardware modules (network/storage/compute) with simplified and automated administration to implement and manage the dis-aggregated infrastructure. It seeks to dis-aggregate compute, storage, and networking fabric resources into shared resource pools that can be available for on-demand allocation.

The move towards composable is all part of the evolution toward a more modular, integrated, fluid infrastructure. At the end of the day, composable infrastructure is about providing greater business agility and unlocking efficiencies that just aren’t possible today. What does this mean exactly?

- For customers, this means they can finally capitalize on the promise of “pay-as-you-go” from the end-to-end consumption model of IT.

- For end users, this means being able to dynamically adjust IT resource consumption as the business needs fluctuate.

- For IT providers, this means being able to efficiently orchestrate business demands across their infrastructure, without having to physically set up or reconfigure hardware resources, in order to stay competitive.

By all accounts, composable can and will have high value. Imagine assembly of the perfect IT infrastructure – optimized ratio of cores, memory, accelerators, storage, and networking plus a management and orchestration layer that simply instructs the infrastructure to aggregate the right resources in the right place at the right time, and then return all the disaggregated assets back when completed for the next job.

This is a great idea, but the technology isn’t there yet. This level of agility cannot be fully realized until systems are completely disaggregated and virtually stitched back together to form a pool of resources, where applications and workloads can be orchestrated across these pools. From this point forward, I will refer to this nirvana state as full composability.
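To make the compose-and-return cycle concrete, here is a minimal sketch in Python. It is a toy model only: the `ResourcePool` and `Composer` classes, the resource names and the capacities are all hypothetical, and no real orchestration product works exactly this way.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """A shared pool of one resource type (hypothetical model)."""
    name: str
    capacity: int
    allocated: int = 0

    @property
    def free(self) -> int:
        return self.capacity - self.allocated


@dataclass
class Composer:
    """Toy orchestration layer that composes and releases logical systems."""
    pools: dict[str, ResourcePool]
    systems: dict[str, dict[str, int]] = field(default_factory=dict)

    def compose(self, system_id: str, request: dict[str, int]) -> None:
        if system_id in self.systems:
            raise ValueError(f"{system_id} is already composed")
        # Check every pool first so a failed request allocates nothing.
        for kind, amount in request.items():
            if kind not in self.pools or self.pools[kind].free < amount:
                raise ValueError(f"cannot satisfy {amount} x {kind}")
        for kind, amount in request.items():
            self.pools[kind].allocated += amount
        self.systems[system_id] = dict(request)

    def release(self, system_id: str) -> None:
        # Return every resource to its pool so the next job can use it.
        for kind, amount in self.systems.pop(system_id).items():
            self.pools[kind].allocated -= amount


# Illustrative capacities, not taken from any real system.
composer = Composer(pools={
    "cores": ResourcePool("cores", capacity=64),
    "dram_gb": ResourcePool("dram_gb", capacity=1024),
    "gpus": ResourcePool("gpus", capacity=8),
})

composer.compose("job-a", {"cores": 16, "dram_gb": 256, "gpus": 2})
free_during = {kind: pool.free for kind, pool in composer.pools.items()}

composer.release("job-a")
free_after = {kind: pool.free for kind, pool in composer.pools.items()}
```

The bookkeeping is the easy part. What the sketch glosses over is the hard part of full composability: physically attaching cores, memory and accelerators from separate pools at acceptable latency.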

Composable infrastructure as we know it today

The industry is heading in the direction of full composability but it does not exist today. Below is a quick look at what the industry can and cannot compose today:

| What can the industry compose today? | What can’t the industry compose today? |
| --- | --- |

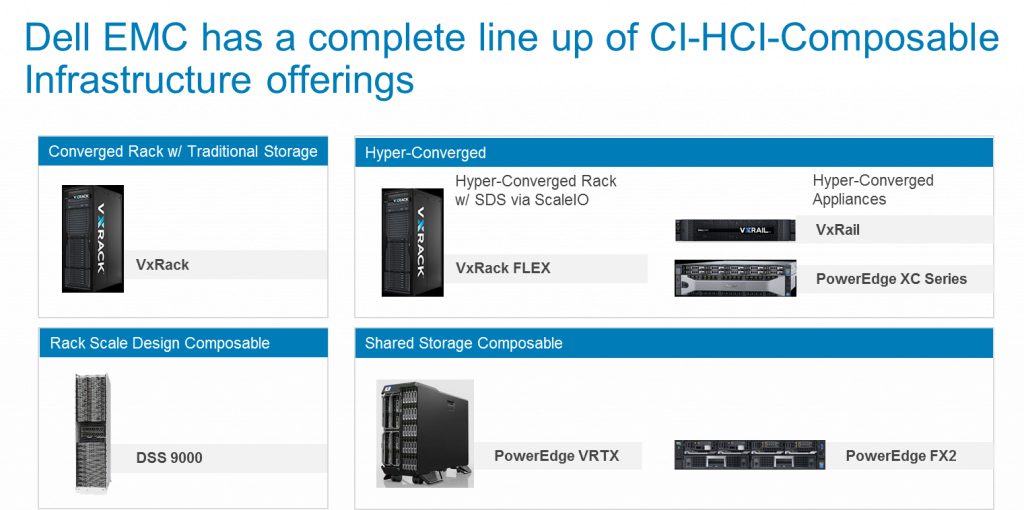

And, here is Dell’s full portfolio that spans what is composable today:

What needs to happen for full composability?

Getting to a fluid, dynamic infrastructure is a journey and Dell is accelerating that shift while working with the industry to re-invent both hardware and software aligning with a broad vision for composable infrastructure.

On the hardware side, accelerators, IO and memory inside the server are the least composable resources today because we don’t have technology available for dis-aggregation down into the memory semantic world. The difficulty lies in the elements we are trying to dis-aggregate – it’s all about bandwidth and latency.

- Slow resources (microseconds) like storage/network have thick protocol stacks (TCP/IP, iSCSI, NFS…), so layering software on top does not noticeably impact overall performance. In the case of HCI/SDS, it can even enhance performance because the data is local.

- Fast resources (nanoseconds) that live in the memory domain (DRAM, storage class memory, GPUs/FPGAs) talk native CPU load/store, not protocol stacks. With no protocol stack in the path, any layer added between the CPU and these devices shows up directly as latency – yet it’s these fast resources that are critical to achieving full composability.
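A quick back-of-the-envelope calculation shows why the same added layer is harmless in one domain and fatal in the other. The numbers below are assumptions chosen only to illustrate the orders of magnitude (a 100-microsecond storage/network access, a 100-nanosecond memory access, and a layer that adds 1 microsecond); they are not measurements.

```python
def overhead_fraction(device_latency_ns: float, added_latency_ns: float) -> float:
    """Fraction of the total access time that is spent in the added layer."""
    return added_latency_ns / (device_latency_ns + added_latency_ns)


ADDED_NS = 1_000.0  # assumed cost of an extra software/protocol layer (1 microsecond)

slow = overhead_fraction(100_000.0, ADDED_NS)  # assumed 100 us storage/network access
fast = overhead_fraction(100.0, ADDED_NS)      # assumed 100 ns memory-domain access

print(f"slow device: {slow:.1%} of access time is added overhead")  # 1.0%
print(f"fast device: {fast:.1%} of access time is added overhead")  # 90.9%
```

Under these assumptions the extra layer is lost in the noise for the slow device but dominates the access time for the fast one, which is why the memory domain needs a fabric that speaks load/store natively.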

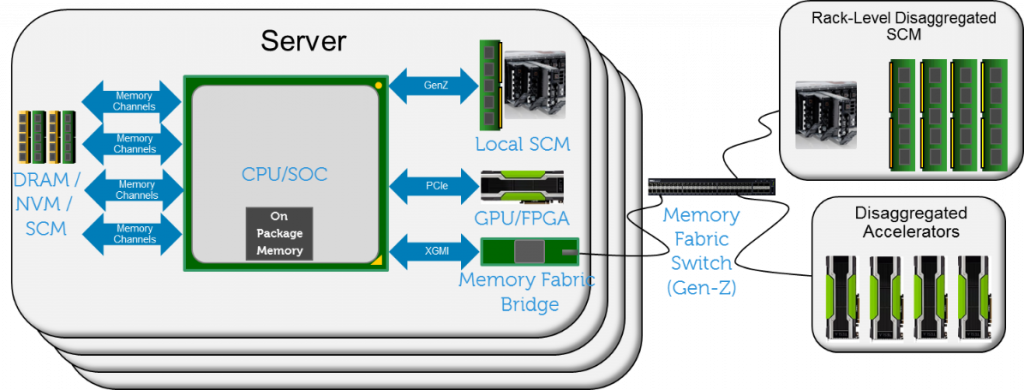

So the question becomes: how do you keep the latency down for these fast devices? You dis-aggregate using a memory centric architecture and a memory semantic fabric like Gen-Z. Below is an image of what this would look like.

You may hear people say that they’ve cracked the interconnect problem, but what speaks volumes is that all the OEMs are working together architecturally on a memory semantic fabric, which is needed for dis-aggregation and full composability. You may hear other people say PCIe is capable of this, but PCIe was never optimized for it, nor does it have the capabilities to enable the memory semantic fabric needed for dis-aggregation. Many have tried to make it a shareable fabric and failed – again, that is why Gen-Z was created.

Beyond the hardware, the software is evolving too. Full composability requires open APIs that third parties can build on, which is why the industry, through DMTF, just added composability support to Redfish. SNIA Swordfish has emerged as an extension of the DMTF Redfish specification, so the same easy-to-use RESTful interface is used to seamlessly manage storage equipment and storage services. As part of DMTF, Dell has been active in shaping this future for the good of our customers and the industry.
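For a feel of what that looks like from a client’s point of view, here is a rough sketch that builds the kind of JSON body a client might send to compose a system out of resource blocks. It follows my reading of the Redfish composition model (a request to the Systems collection that links to resource blocks); the URIs shown are the conventional ones and a real service may advertise different locations, the block IDs and system name are hypothetical, and the sketch deliberately sends nothing over the network.

```python
import json

# Conventional Redfish locations; a real service advertises its own URIs.
SYSTEMS_COLLECTION = "/redfish/v1/Systems"
RESOURCE_BLOCKS = "/redfish/v1/CompositionService/ResourceBlocks"


def build_compose_request(name: str, block_ids: list[str]) -> dict:
    """Build the body for a compose request that references resource blocks."""
    return {
        "Name": name,
        "Links": {
            "ResourceBlocks": [
                {"@odata.id": f"{RESOURCE_BLOCKS}/{block_id}"}
                for block_id in block_ids
            ]
        },
    }


# Hypothetical block IDs; a client would discover real ones from the service.
payload = build_compose_request(
    "sample-composed-system", ["ComputeBlock0", "StorageBlock3"]
)
body = json.dumps(payload, indent=2)

print(f"POST {SYSTEMS_COLLECTION}")
print(body)
```

The point is less the exact payload than the model: the client asks for a system in terms of pooled blocks over one open RESTful interface, rather than through a vendor-specific tool.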

Back to Today

Today, composability is tackled by “grouping”. A great example of this is our Dell DSS 9000 rack scale infrastructure. Designed with rack level management, the DSS 9000 uses open and industry standard APIs including DMTF Redfish and SNIA Swordfish. Along with Intel® Rack Scale Design (RSD), the DSS 9000 is designed to:

- Ensure interoperability with heterogeneous systems

- Enable compute, storage and networking resources to be grouped together, provisioned and managed as one

- Allow customers to compose systems from the rack and across the datacenter

- All while working in the cloud environment of choice – whether it’s OpenStack, VMware, Microsoft or a custom orchestration platform

The image below helps illustrate this point.

How to complete the journey?

So how do we complete the journey to full composability? Let me say it again: we must embrace industry efforts toward a memory-centric architecture so we can dis-aggregate down to the memory-centric world (DRAM, SCM, GPUs, etc.).

The industry is on the right path through collaboration on Gen-Z, efforts in DMTF with Redfish, and SNIA’s Swordfish. Every quarter, we are getting closer to enabling such an architecture, but the industry isn’t there right now.

The hype on composable is high. The good news is that this reaffirms for the industry and Dell that we’re working on the right problem. The bad news is that the composable discussion lacks the much-needed context of what’s possible and not possible today. Rest assured though, Dell will continue to work across the industry to bring together an ecosystem of partners to deliver the full potential of composable infrastructure.